The Met Police will scale up Live Facial Recognition use after reporting no false arrests and over 900 arrests linked to deployments.

The Metropolitan Police has announced plans to scale up its use of Live Facial Recognition (LFR) technology across London after reporting a year of successful results and no arrests linked to false alerts.

Between September 2024 and September 2025, the force said 962 people were arrested following LFR deployments. In the same period, there were no arrests made as a result of false matches.

According to the Met, 10 people were wrongly flagged by the system during that time. Four were not stopped at all, while the remaining six were briefly spoken to by officers, with each interaction lasting less than five minutes.

Lindsey Chiswick, who leads on LFR for the Met and nationally, described the technology as “a powerful and game-changing tool”. She said that its success in identifying serious offenders was helping to make London safer.

However, human rights campaigners have continued to express concerns about the potential for false matches and the impact on privacy.

A report published by the Metropolitan Police on Friday revealed that, since LFR was first introduced, the technology has been used in operations leading to more than 1,400 arrests in total. Of these, over 1,000 people have been charged or cautioned.

Those arrested included individuals wanted by police or the courts, as well as offenders breaching court-imposed conditions, such as registered sex offenders and stalkers.

The force added that more than a quarter of arrests from LFR deployments involved suspects linked to violence against women and girls, including offences such as rape, strangulation, and domestic abuse.

Public support for the use of the technology remains strong. A survey carried out by the Mayor’s Office for Policing and Crime found that 85% of respondents backed the Met’s use of LFR to help locate serious and violent criminals, people wanted by the courts, and those at risk of harming themselves.

Despite this, campaign group Big Brother Watch has launched a legal challenge against the Met’s use of the technology. The case is being brought alongside Shaun Thompson, who was wrongly identified by an LFR camera in February 2024.

Speaking to the BBC, Mr Thompson described the experience of being stopped as “intimidating” and “aggressive”.

The Met reported that its LFR system currently has a false alert rate of just 0.0003% across more than three million faces scanned. Following its latest findings, the force said it plans to “build on its success” by increasing the number of deployments each week.

Ms Chiswick said, “We are proud of the results achieved with LFR. Our goal has always been to keep Londoners safe and improve the trust of our communities. Using this technology is helping us do exactly that.

“This is a powerful and game-changing tool, which is helping us to remove dangerous offenders from our streets and deliver justice for victims. We remain committed to being transparent and engaging with communities about our use of LFR, to demonstrate we are using it fairly and without bias.”

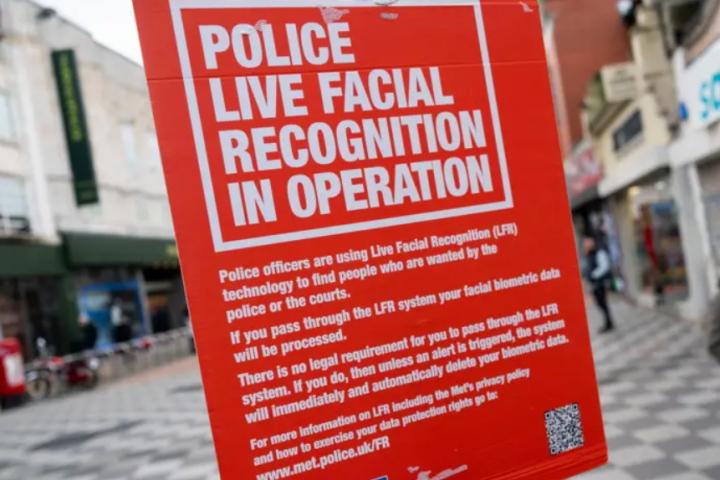

The Met also emphasised that if someone walks past an LFR camera and is not wanted by police, their biometric data is immediately and permanently deleted.